Financial Results:

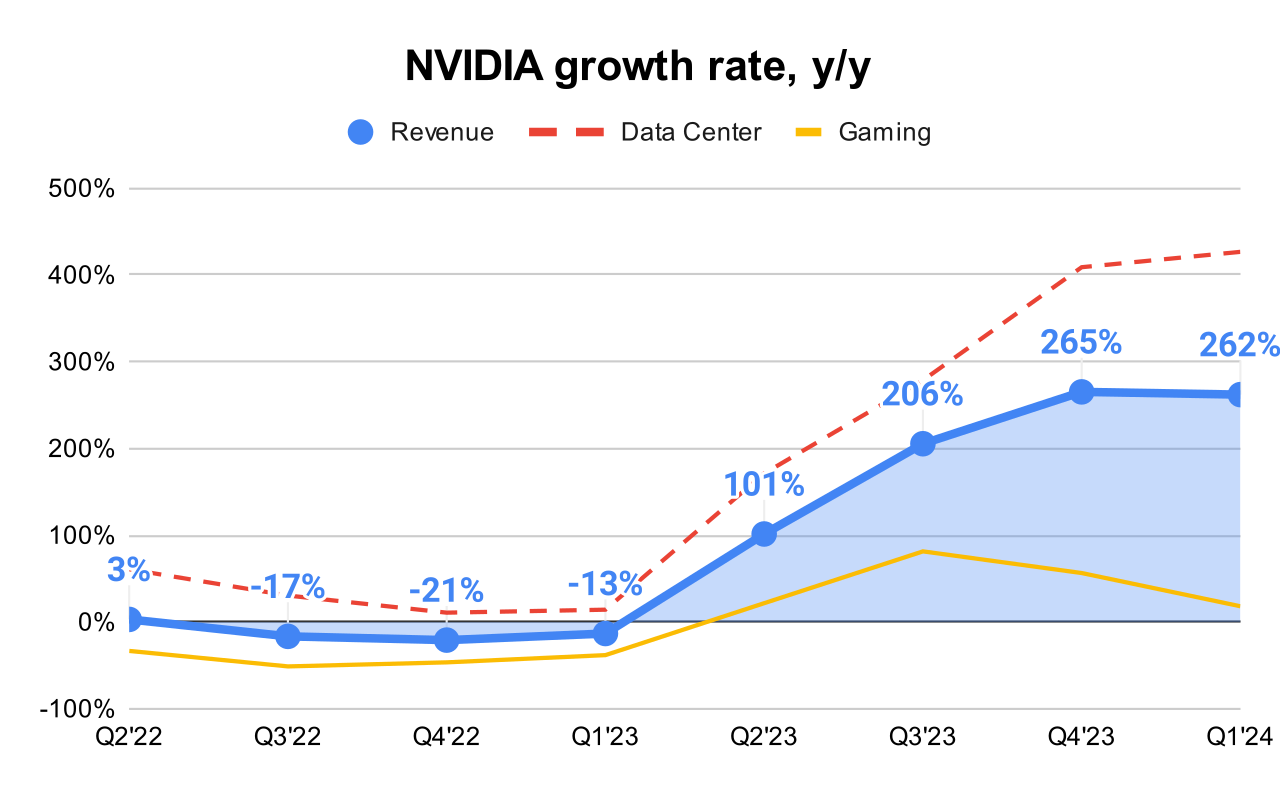

↗️$26.0B rev (+262.1% YoY, +265.3% LQ) beat est by 6.0%

↗️GM (78.4%, +13.7%pp YoY)🟢

↗️EBIT Margin (69.3%, +26.9%pp YoY)🟢

↗️FCF Margin (57.3%, +20.6%pp YoY)🟢

↗️Net Margin (57.1%, +28.7%pp YoY)🟢

↗️EPS $6.12 beat est by 9.9%🟢

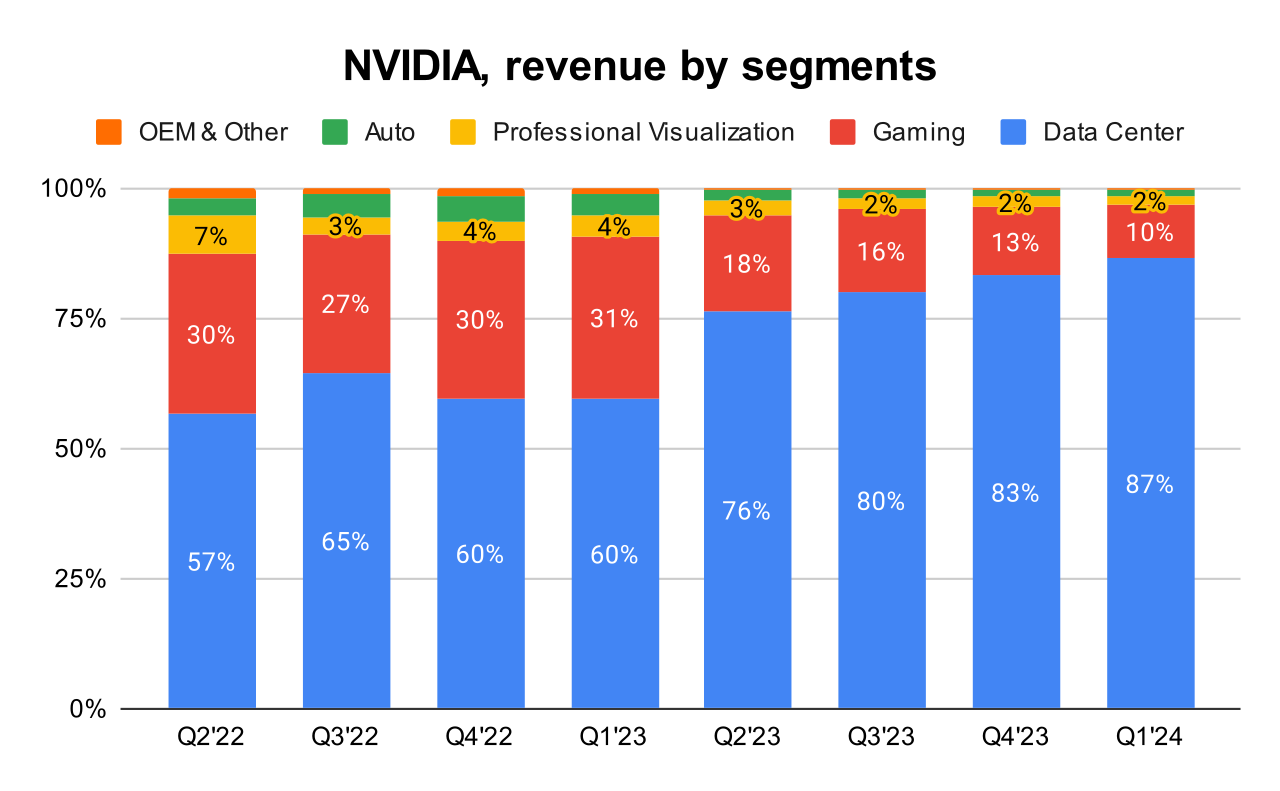

Segment Revenue

↗️Data Center $22,563M rev (+426.7% YoY)🟢

➡️Gaming $2,647M rev (+18.2% YoY)🟡

➡️Professional Visualization $427M rev (+44.7% YoY)🟡

➡️Auto $329M rev (+11.1% YoY)🟡

➡️OEM & Other $78M rev (+1.3% YoY)🟡
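As a quick sanity check on the segment figures above, the sketch below sums the five segments and computes each one's share of total revenue. The variable and function names are illustrative, not taken from any NVIDIA filing.

```python
# Segment revenue in $M, as listed above.
segments = {
    "Data Center": 22_563,
    "Gaming": 2_647,
    "Professional Visualization": 427,
    "Auto": 329,
    "OEM & Other": 78,
}

# Sum of all segments in $M; this should land close to the $26.0B headline.
total = sum(segments.values())


def revenue_share(name: str) -> float:
    """Return a segment's share of total revenue, in percent."""
    return 100 * segments[name] / total


for name in segments:
    print(f"{name}: {revenue_share(name):.1f}% of revenue")
```

The segments sum to $26,044M, consistent with the $26.0B headline, and Data Center alone accounts for roughly 87% of the quarter's revenue.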

Operating expenses

↘️S&M+G&A/Revenue 3.0% (3.2% LQ)

↘️R&D/Revenue 10.4% (11.2% LQ)

Dilution

↘️SBC/rev 4%, -0.6%pp QoQ

↘️Basic shares down 0.3% YoY, -0.4%pp QoQ🟢

↗️Diluted shares flat (0.0%) YoY, +0.1%pp QoQ🟢

Guidance

↗️Q2'24 $28.0B guide (+107.3% YoY) beat est by 5.4%

Key points from NVIDIA’s First Quarter 2024 Earnings Call:

Record Financial Performance

NVIDIA reported Q1 revenue of $26 billion, up 18% sequentially and 262% year on year, ahead of its own forecast of $24 billion.
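The growth percentages can be turned back into the implied comparison-period revenue with one division. The following is a minimal sketch that uses the rounded figures quoted above, so the results are approximations. The helper names `implied_base` and `beat_pct` are made up for illustration.

```python
def implied_base(current: float, growth_pct: float) -> float:
    """Back out the comparison-period value from a growth percentage."""
    return current / (1 + growth_pct / 100)


def beat_pct(actual: float, reference: float) -> float:
    """Percentage by which `actual` exceeds `reference`."""
    return 100 * (actual / reference - 1)


q1_revenue = 26.0  # $B, rounded headline figure

prior_quarter = implied_base(q1_revenue, 18)   # implied revenue one quarter earlier
prior_year = implied_base(q1_revenue, 262)     # implied revenue one year earlier
vs_forecast = beat_pct(q1_revenue, 24.0)       # upside versus the $24B forecast

print(f"Implied prior quarter: ${prior_quarter:.1f}B")
print(f"Implied prior year:    ${prior_year:.1f}B")
print(f"Above forecast by:     {vs_forecast:.1f}%")
```

On these rounded inputs, the implied prior-quarter revenue is about $22.0B, the implied year-ago revenue is about $7.2B, and the result is about 8.3% above the $24 billion forecast.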

Data Centers

Data Center revenue growth was driven primarily by continued demand for NVIDIA's Hopper GPU computing platform, with enterprises and consumer internet companies contributing significantly. Customers such as Tesla and Meta have sharply increased their use of NVIDIA's technology: Meta trained its Llama 3 model on a cluster of 24,000 H100 GPUs, and Tesla expanded its AI training cluster to 35,000 H100 GPUs.

Product Innovations - Hopper and H200 GPUs

Hopper GPU: Continues to see strong demand. It has been crucial in driving significant growth in NVIDIA's data center revenue.

H200 GPU: A new introduction that nearly doubles the inference performance of the H100. It is on track for Q2 shipments and has already been used by OpenAI to power demonstrations of their GPT-4o model.

Networking Innovations: The new Spectrum-X Ethernet networking solution was also introduced, optimized for AI and expected to become a multibillion-dollar product line within a year.

Generative AI Advancements

Impact on Cloud Providers: Training and inferencing AI on NVIDIA CUDA has accelerated cloud rental revenue growth. For every $1 spent on NVIDIA AI infrastructure, cloud providers can potentially earn $5 in GPU instance hosting revenue over four years.
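To make the $1-to-$5 figure concrete, the arithmetic can be sketched as below. Two assumptions here are not from the call: hosting revenue is spread evenly across the four years, and operating costs (power, facilities, staff) are ignored. The payback figure is therefore an optimistic, revenue-only estimate.

```python
def hosting_revenue(spend: float, multiple: float = 5.0) -> float:
    """Total hosting revenue for a given infrastructure spend."""
    return spend * multiple


def annual_revenue(spend: float, multiple: float = 5.0, years: float = 4.0) -> float:
    """Revenue per year, ASSUMING it accrues evenly over the period."""
    return hosting_revenue(spend, multiple) / years


def payback_years(multiple: float = 5.0, years: float = 4.0) -> float:
    """Years until cumulative revenue equals spend (revenue only, no costs)."""
    return years / multiple


spend = 100.0  # hypothetical spend, in $M
print(f"Total revenue:  ${hosting_revenue(spend):.0f}M")
print(f"Per year:       ${annual_revenue(spend):.0f}M")
print(f"Simple payback: {payback_years():.1f} years")
```

Under these simplifications, a hypothetical $100M outlay would generate $500M over four years, or $125M a year, and would be recouped in under a year on a revenue basis.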

Inference Capabilities: NVIDIA has improved the performance of LLM inference on their H100 GPUs by up to 3x due to CUDA algorithm innovations, translating into a 3x cost reduction for serving models like Llama 3.

Generative AI Applications: Companies like Meta are using NVIDIA GPUs for tasks such as powering their new AI assistant across platforms like Facebook, Instagram, WhatsApp, and Messenger through their Llama 3 model.

Blackwell GPU Architecture

Introduction and Capabilities: Announced at GTC in March, Blackwell delivers up to 4x faster training and 30x faster inference than the H100, enabling real-time generative AI on trillion-parameter models.

Deployment and Adoption: Expected to be available in over 100 OEM and ODM systems at launch, indicating broad and rapid adoption across various customer types and data center environments. This architecture supports diverse configurations including air cooling, liquid cooling, and integration with x86 and Grace CPUs.

Contribution to AI Factories: Forms the foundation for next-generation AI factories aimed at producing AI on an industrial scale, which significantly boosts NVIDIA's ability to offer complete solutions for AI data centers.

Geographical Expansion

Sovereign AI: NVIDIA is facilitating the build-out of sovereign AI capabilities globally, with countries investing in domestic AI computing capacities. Examples include Japan, France, Italy, and Singapore investing in building powerful AI infrastructure using NVIDIA technologies.

China Market: NVIDIA has adapted to new export control restrictions by developing new products specifically for China, aiming to maintain competitiveness in a challenging market environment.

Automotive

Growth was driven by AI cockpit solutions and self-driving platforms, including support for Xiaomi’s new electric vehicle using NVIDIA’s AI car computer.

Announced new design wins for NVIDIA DRIVE Thor, indicating NVIDIA's deepening integration into automotive AI and self-driving technologies.

Dividend and Stock Split

NVIDIA announced a 10-for-1 stock split effective June 10th and a 150% dividend increase, reflecting their strong financial position and confidence in continued growth.
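The split and dividend arithmetic is easy to misread, since a 150% increase means 2.5x the old dividend, not 1.5x. The sketch below uses hypothetical inputs throughout: the share count, share price, and starting dividend are illustrative and are not taken from the text above.

```python
def apply_split(shares: float, price: float, ratio: int = 10) -> tuple[float, float]:
    """A forward split multiplies the share count and divides the price."""
    return shares * ratio, price / ratio


def raise_dividend(dividend: float, increase_pct: float = 150.0) -> float:
    """Apply a percentage increase; +150% means 2.5x the old dividend."""
    return dividend * (1 + increase_pct / 100)


# Hypothetical holding: 5 shares at $1,000 each before the split.
shares, price = apply_split(5, 1000.0)
print(f"{shares} shares at ${price:.2f}, total value ${shares * price:,.2f}")

# Hypothetical pre-split quarterly dividend of $0.04 per share.
new_dividend = raise_dividend(0.04)
per_split_share = new_dividend / 10  # same payout spread over 10x the shares
print(f"Dividend per pre-split share:  ${new_dividend:.2f}")
print(f"Dividend per post-split share: ${per_split_share:.3f}")
```

The split leaves the total value of the holding unchanged. Only the per-share figures move.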

Management comments on the earnings call:

Product Innovations and Future Roadmap

Jensen Huang, CEO: "The Blackwell platform is in full production and forms the foundation for trillion-parameter scale generative AI. The combination of Grace CPU, Blackwell GPUs, NVLink, Quantum, Spectrum, NICs and switches, high-speed interconnects and a rich ecosystem of software and partners let us expand and offer a richer and more complete solution for AI factories than previous generations."

Customers

Colette Kress, CFO: "Leading LLM companies such as OpenAI, Adept, Anthropic, Character.AI, Cohere, Databricks, DeepMind, Meta, Mistral, xAI, and many others are building on NVIDIA AI in the cloud."

Generative AI

Jensen Huang, CEO: "From today's information retrieval model, we are shifting to an answers and skills generation model of computing. AI will understand context and our intentions, be knowledgeable, reason, plan and perform tasks."

Spectrum-X Ethernet

Jensen Huang, CEO: "We started shipping our new Spectrum-X Ethernet networking solution optimized for AI from the ground up. It includes our Spectrum-4 switch, BlueField-3 DPU, and new software technologies to overcome the challenges of AI on Ethernet to deliver 1.6x higher networking performance for AI processing compared with traditional Ethernet."

Automotive

Colette Kress, CFO: "We supported Xiaomi in the successful launch of its first electric vehicle, the SU7 sedan built on the NVIDIA DRIVE Orin, our AI car computer for software-defined AV fleets. We also announced a number of new design wins on NVIDIA DRIVE Thor, the successor to Orin, powered by the new NVIDIA Blackwell architecture with several leading EV makers, including BYD, XPeng, GAC's Aion Hyper and Nuro."

Demand

Jensen Huang, CEO: "The demand for GPUs in all the data centers is incredible. We're racing every single day... There's also a long line of generative AI startups, some 15,000, 20,000 startups that in all different fields from multimedia to digital characters, of course, all kinds of design tool application -- productivity applications, digital biology, the moving of the AV industry to video, so that they can train end-to-end models, to expand the operating domain of self-driving cars."